Have you ever encountered text that looks like a jumbled mess of characters, indecipherable and frustrating to read? The seemingly simple task of displaying text can turn into a battle between character encodings, and the result is the garbled text often called "mojibake" that plagues digital communication.

The digital world relies on character encodings to represent text. These encodings are essentially systems that map characters to numerical values, allowing computers to store and process text. However, when the encoding used to display text doesn't match the encoding in which the text was originally written, chaos ensues. This mismatch results in the substitution of expected characters with a sequence of seemingly random characters, making the text unreadable.
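A minimal sketch of such a mismatch, assuming the text was written as UTF-8 and is then read back as Windows-1252 (`cp1252`). The sample word and the choice of `cp1252` are illustrative assumptions, not details taken from a specific system:

```python
# The text as its author wrote it.
original = "café"

# Stored or transmitted as UTF-8 bytes: 'é' becomes the two bytes C3 A9.
stored = original.encode("utf-8")

# A reader that assumes Windows-1252 turns each byte into its own character.
wrong = stored.decode("cp1252")

# A reader that uses the matching encoding recovers the text.
right = stored.decode("utf-8")

print(stored)  # b'caf\xc3\xa9'
print(wrong)   # cafÃ©
print(right)   # café
```

The bytes never change here. Only the table used to interpret them differs, which is why the damage is usually reversible as long as the bytes are preserved.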

One common issue arises with special characters, such as accented letters, diacritics, and symbols. Different languages and systems use different encodings to represent these characters. When the encoding is interpreted incorrectly, the special characters are replaced with a string of unfamiliar symbols that obscures the original meaning. The output no longer conveys information; it frustrates the reader and hampers communication.

Consider the Spanish sentence "Cuando hacemos una página web en utf8, al escribir una cadena de texto en javascript que contenga acentos, tildes, eñes, signos de interrogación y demás caracteres considerados especiales, se pinta..." (in English: "When we build a web page in UTF-8 and write a text string in JavaScript that contains accents, tildes, eñes, question marks, and other characters considered special, it is rendered..."). Imagine that this sentence, when rendered, turns into a series of unintelligible characters. Instead of the intended words, the user sees sequences such as `\u00e3\u00a2\u00e2\u201a\u00ac\u00eb\u0153yes\u00e3\u00a2\u00e2\u201a\u00ac\u00e2\u201e\u00a2` (shown here as Unicode escapes). This is a common symptom of character encoding problems.

Another frequently seen problem involves apostrophes and spaces. Instead of the expected apostrophe, you might encounter `\u00e3\u0192\u00e2\u00a2\u00e3\u00a2\u00e2\u20ac\u0161\u00e2\u00ac\u00e3\u00a2\u00e2\u20ac\u017e\u00e2\u00a2`. Likewise, spaces after periods may be replaced with sequences like `\u00e3\u201a` or `\u00e3\u0192\u00e2\u20ac\u0161`. The same thing happens when data is exported from a server through an API and the characters are not displayed correctly on the receiving side. In that scenario the data is still stored, but its readability is compromised.

| Issue | Description | Common Causes | Solutions |
|---|---|---|---|
| Character Encoding Mismatch | Text displayed incorrectly due to encoding differences. | Incorrectly set encoding in a text editor or database; data transferred with the wrong encoding; application not recognizing the source encoding. | Specify the correct encoding when saving or opening files; convert the text to the correct encoding (e.g., UTF-8); ensure your application handles the source encoding correctly. |
| Double Encoding | Characters are encoded multiple times, resulting in a strange sequence of characters. | Encoding applied twice during conversion or data transfer; incorrect handling of character encoding by software. | Decode the text once to remove the extra encoding; check how the text is being encoded at each step; inspect the source and target encodings to fix the sequence. |
| Incorrect Character Interpretation | Specific characters (like special characters and symbols) rendered incorrectly. | Using the wrong encoding for a specific set of characters; software not correctly recognizing characters. | Verify and correct encoding in all parts of the process; choose the proper encoding that supports these characters (e.g., UTF-8); make sure your software is updated to handle the characters. |
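The "Double Encoding" row can be illustrated with a short sketch. It assumes each faulty step reads UTF-8 bytes as Windows-1252, which is a common case but not the only possible one. The helper names `misdecode` and `peel` are hypothetical and exist only for this example:

```python
def misdecode(text: str) -> str:
    """Simulate one faulty step: UTF-8 bytes read as Windows-1252."""
    return text.encode("utf-8").decode("cp1252")


def peel(text: str, max_layers: int = 5) -> tuple[str, int]:
    """Undo faulty steps one at a time and report how many were removed.

    Stops as soon as a round trip fails or changes nothing, so text that
    is already clean is returned untouched.
    """
    layers = 0
    for _ in range(max_layers):
        try:
            candidate = text.encode("cp1252").decode("utf-8")
        except (UnicodeEncodeError, UnicodeDecodeError):
            break
        if candidate == text:
            break
        text = candidate
        layers += 1
    return text, layers


apostrophe = "\u2019"        # right single quotation mark
once = misdecode(apostrophe)  # one faulty step
twice = misdecode(once)       # the same fault applied again

print(once)         # â€™
print(twice)        # Ã¢â‚¬â„¢
print(peel(twice))  # ('’', 2)
```

Apart from letter case, the doubly garbled apostrophe resembles the tail of the escaped sequence quoted earlier in the article, which suggests that text went through more than one faulty conversion.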

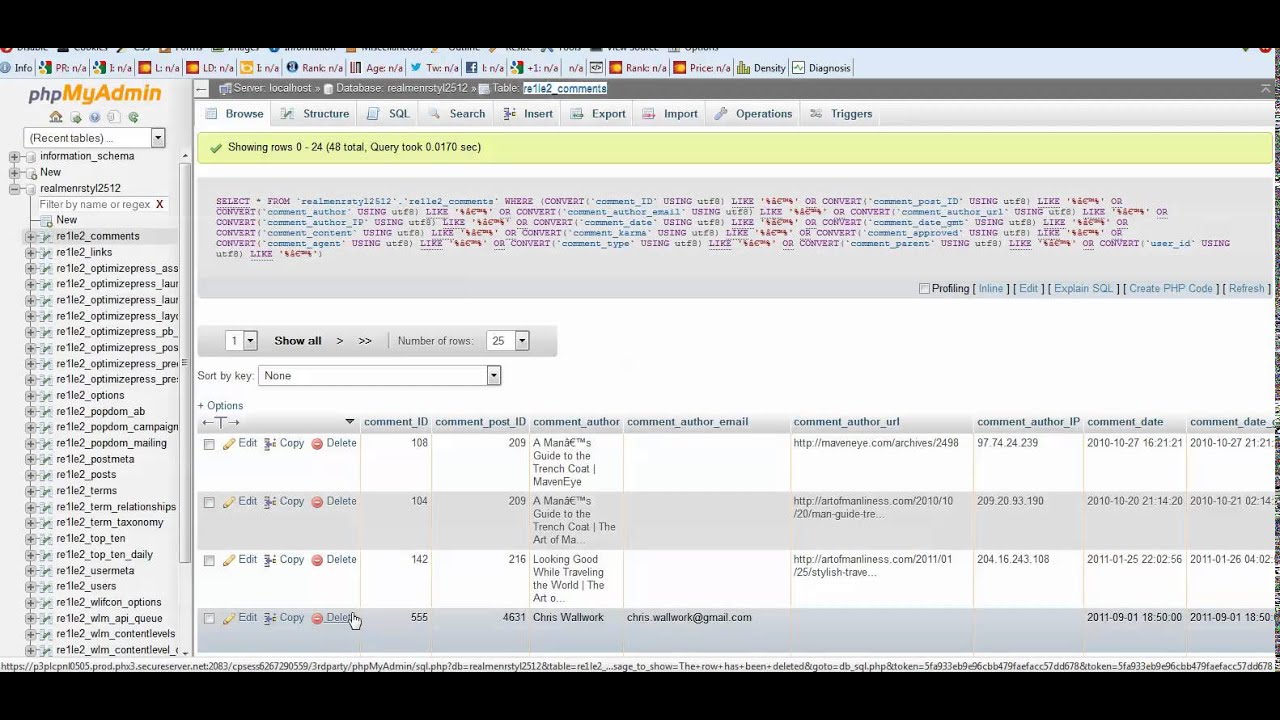

The challenge of managing character encodings extends beyond mere aesthetic concerns. It can significantly impact the usability and functionality of applications, websites, and databases. Information retrieval, text processing, and data storage all depend on the accurate handling of character encodings. When encoding issues are present, this can lead to incorrect search results, broken data parsing, and a general breakdown in communication.

One user reported a practical workaround: convert the garbled text to binary (presumably its raw bytes) and then decode the result as UTF-8. The specifics of the approach are not spelled out, but the idea is sound for text whose bytes are still intact and were merely read with the wrong encoding. It is not a universal fix. Applying it requires knowing, or correctly guessing, which encoding was used for the faulty read, and it cannot restore characters that were already lost or replaced along the way.
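One possible reading of that workaround, sketched under the assumption that the garbled text came from UTF-8 bytes read as Windows-1252. The function name `reinterpret_as_utf8` and its `wrong_encoding` parameter are hypothetical; the user's actual method is not described in enough detail to reproduce:

```python
def reinterpret_as_utf8(garbled: str, wrong_encoding: str = "cp1252") -> str:
    """Turn text back into bytes, then decode those bytes as UTF-8.

    If either step fails, the input was probably not this kind of
    mojibake, so it is returned unchanged instead of being damaged further.
    """
    try:
        raw = garbled.encode(wrong_encoding)  # text -> binary
        return raw.decode("utf-8")            # binary -> UTF-8 text
    except UnicodeError:
        return garbled


print(reinterpret_as_utf8("pÃ¡gina"))  # página
print(reinterpret_as_utf8("página"))   # página (already fine, left alone)
```

Returning the input on failure is a deliberate design choice: a repair step that raises or mangles clean text is harder to apply safely across a whole dataset.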

In a broader context, mojibake shows that character encoding is a fundamental component of digital communication. When exported data contains special characters and the encoding is wrong at any step, spaces and apostrophes turn into multi-character sequences like the ones described earlier. When this happens, the integrity of the information, and with it the user experience, is lost.

These scenarios call for rigorous attention to detail when working with text data. Solutions often require careful investigation of the original encoding and the target encoding, and sometimes a series of conversions and adjustments. Specialized libraries such as "ftfy" (fixes text for you) were created to automate and simplify the process. ftfy provides functions such as `fix_text` and `fix_file` that address encoding issues in a more automated way.
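A small sketch of calling ftfy, assuming the third-party package is installed (for example with `pip install ftfy`). The wrapper `repair` is a hypothetical helper for this example; when ftfy is missing it falls back to a plain Windows-1252 round trip, which is a much cruder substitute and not what ftfy does internally:

```python
try:
    import ftfy  # third-party package, not part of the standard library
except ImportError:
    ftfy = None


def repair(text: str) -> str:
    """Repair mojibake with ftfy when available, else try a simple round trip."""
    if ftfy is not None:
        return ftfy.fix_text(text)
    try:
        # Fallback assumption: UTF-8 bytes were read as Windows-1252.
        return text.encode("cp1252").decode("utf-8")
    except UnicodeError:
        return text


print(repair("âœ” No problems"))  # ✔ No problems
```

ftfy applies heuristics to decide whether and how to repair a string, so its results on short or ambiguous inputs are worth spot-checking before it is applied to a whole dataset.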

Consider, for example, data pulled from a server through an API and saved as a .csv file that then fails to display the proper characters. When the information is retrieved from the server to a local machine, the characters may not appear the way they were saved on the server. This underscores the importance of checking the encoding at every step of data processing. Even subtle errors in character encoding can dramatically affect the meaning and usability of information.
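The CSV case can be sketched as follows, assuming the server writes UTF-8 and the local reader must be told so explicitly. The file name `export.csv` and the sample rows are made up for illustration:

```python
import csv
import os
import tempfile

rows = [["name", "city"], ["José", "Málaga"]]

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "export.csv")

    # Stand-in for the server side: the export is written as UTF-8.
    with open(path, "w", encoding="utf-8", newline="") as f:
        csv.writer(f).writerows(rows)

    # Reading with the matching encoding preserves the characters.
    with open(path, encoding="utf-8", newline="") as f:
        good = list(csv.reader(f))

    # Reading with a different encoding reproduces the garbling.
    with open(path, encoding="cp1252", newline="") as f:
        bad = list(csv.reader(f))

print(good[1])  # ['José', 'Málaga']
print(bad[1])   # ['JosÃ©', 'MÃ¡laga']
```

Passing `encoding` explicitly matters because `open` otherwise uses a platform-dependent default, so the same script can behave differently on the server and on the local machine.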

It also helps to recognize certain Latin-alphabet characters, such as "Latin capital letter A with circumflex" (Â), "Latin capital letter A with tilde" (Ã), and "Latin capital letter A with ring above" (Å). They appear frequently in garbled text and are often a clue to the root cause, because they are what the leading bytes of multi-byte UTF-8 sequences look like when read as a single-byte Latin encoding. Understanding the nuances of different character encodings, such as UTF-8, is equally important. UTF-8 is a variable-width character encoding capable of encoding all Unicode characters, using one to four bytes per character.
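A short sketch of both points, using only the standard library. The sample characters are arbitrary choices for illustration:

```python
import unicodedata

# 0xC2, 0xC3 and 0xC5 occur as the first byte of two-byte UTF-8 sequences.
# Read one byte at a time as Latin-1, they show up as the letters named above.
lead_bytes = [0xC2, 0xC3, 0xC5]
names = {b: unicodedata.name(bytes([b]).decode("latin-1")) for b in lead_bytes}
for byte, name in names.items():
    print(hex(byte), name)

# UTF-8 is variable-width: different characters need 1 to 4 bytes.
widths = {ch: len(ch.encode("utf-8")) for ch in ["A", "é", "€", "😀"]}
print(widths)  # {'A': 1, 'é': 2, '€': 3, '😀': 4}

# A non-breaking space (U+00A0) is the bytes C2 A0 in UTF-8, so a Latin-1
# reader shows a stray 'Â' in front of the space.
nbsp_misread = "\u00a0".encode("utf-8").decode("latin-1")
print(repr(nbsp_misread))
```

The last lines show one plausible reason why spaces in garbled text are so often preceded by an unexpected Â.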

In essence, accurate handling of character encoding is essential to ensure the integrity and readability of textual data. As the digital landscape continues to evolve, a firm grasp of this essential element will become even more important.